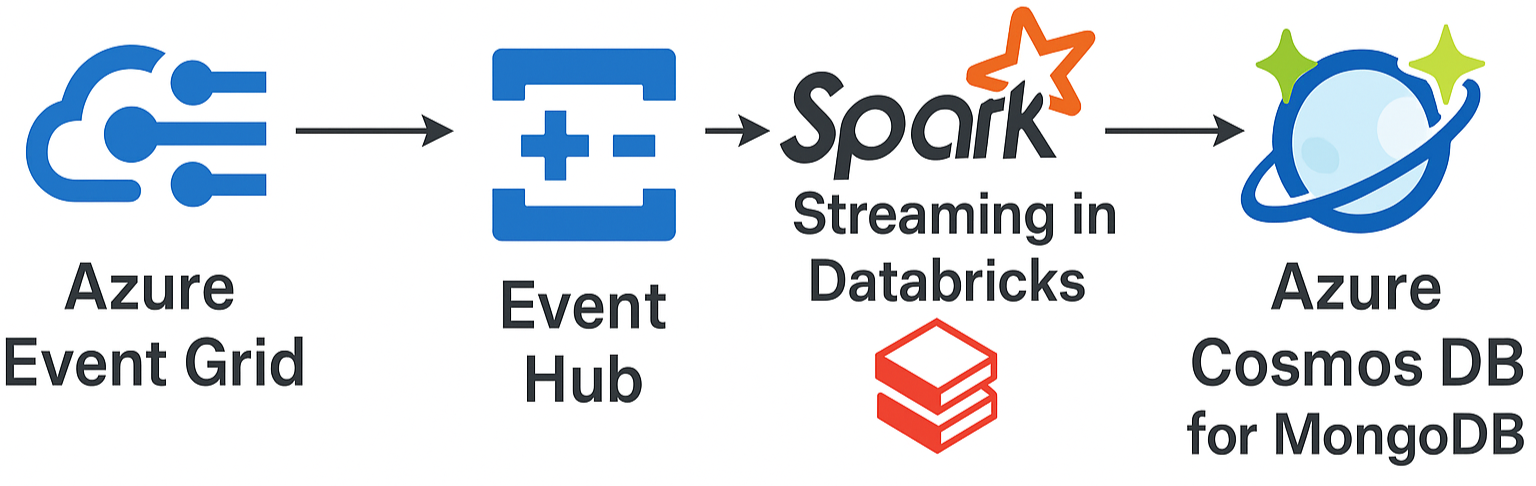

After experimenting with streaming on platforms like Apache Kafka and AWS Kinesis, I wanted to explore real-time data processing in the Azure ecosystem. So, I built a complete streaming solution from scratch—integrating multiple Azure services with Databricks and Spark Structured Streaming.

⚙️ Step-by-step Breakdown

- Step 1: Created an Azure Event Grid Topic

- Step 2: Created an Event Grid subscription that routes the topic's events to an Azure Event Hub

- Step 3: Sent test events to Event Grid with a `curl` command; Event Grid passes them on to the Azure Event Hub

- Step 4: Used Apache Spark Structured Streaming in Databricks to consume events from the Event Hub

- Step 5: Stored the transformed results in Azure Cosmos DB for MongoDB
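
To make Step 3 concrete, here is a minimal Python sketch of the HTTP request that a `curl` test would send to a custom Event Grid topic. It is an illustration, not the code from the project. It assumes the topic uses the default Event Grid event schema and key-based authentication through the `aeg-sas-key` header. The endpoint, access key, subject, and event type are hypothetical placeholders. The request is only built, not sent, so the sketch runs without an Azure account.

```python
import json
import urllib.request
import uuid
from datetime import datetime, timezone


def build_event(subject, event_type, data):
    """Build one event using the fields of the Event Grid event schema."""
    return {
        "id": str(uuid.uuid4()),
        "subject": subject,
        "eventType": event_type,
        "eventTime": datetime.now(timezone.utc).isoformat(),
        "data": data,
        "dataVersion": "1.0",
    }


def build_publish_request(topic_endpoint, access_key, events):
    """Prepare (but do not send) the POST that publishes a list of events."""
    body = json.dumps(events).encode("utf-8")
    return urllib.request.Request(
        url=topic_endpoint,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "aeg-sas-key": access_key,  # topic access key (assumed auth mode)
        },
    )


if __name__ == "__main__":
    # Hypothetical placeholders: substitute your own topic endpoint and key.
    endpoint = "https://example-topic.westus2-1.eventgrid.azure.net/api/events"
    event = build_event("demo/orders/1", "Demo.OrderCreated", {"orderId": 1})
    request = build_publish_request(endpoint, "<topic-access-key>", [event])
    print(request.get_method(), request.full_url)
    print(request.data.decode("utf-8"))
    # To actually publish, call urllib.request.urlopen(request).
```

Events are sent as a JSON array, so several test events can go out in one request.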

Architecture: Real-time streaming from Azure Event Grid to Cosmos DB via Spark in Databricks
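
The Spark side of this architecture (Steps 4 and 5) could look roughly like the PySpark sketch below. It is not the project's code and rests on several assumptions: it reads the Event Hub through its Kafka-compatible endpoint rather than a dedicated Event Hubs connector, it expects one Event Grid event per message, and it writes with the MongoDB Spark Connector 10.x (`format("mongodb")`) inside `foreachBatch`. The function names, namespace, hub, database, collection, and checkpoint path are all hypothetical.

```python
def eventhub_kafka_options(namespace, event_hub, connection_string):
    """Kafka-source options for the Kafka-compatible endpoint of Event Hubs."""
    # The "kafkashaded." prefix is specific to Databricks runtimes; plain
    # Apache Spark uses org.apache.kafka... without the prefix.
    jaas = (
        "kafkashaded.org.apache.kafka.common.security.plain.PlainLoginModule "
        f'required username="$ConnectionString" password="{connection_string}";'
    )
    return {
        "kafka.bootstrap.servers": f"{namespace}.servicebus.windows.net:9093",
        "subscribe": event_hub,  # the Event Hub name acts as the Kafka topic
        "kafka.security.protocol": "SASL_SSL",
        "kafka.sasl.mechanism": "PLAIN",
        "kafka.sasl.jaas.config": jaas,
        "startingOffsets": "latest",
    }


def start_stream(spark, kafka_options, mongo_uri, database, collection,
                 checkpoint_path):
    """Read events from the Event Hub and append them to a MongoDB collection."""
    # Imported here so the options helper above works without PySpark installed.
    from pyspark.sql.functions import col, from_json
    from pyspark.sql.types import StringType, StructField, StructType

    # Subset of the Event Grid event schema; "data" is kept as a raw JSON string.
    schema = StructType([
        StructField("id", StringType()),
        StructField("subject", StringType()),
        StructField("eventType", StringType()),
        StructField("eventTime", StringType()),
        StructField("data", StringType()),
    ])

    events = (
        spark.readStream.format("kafka")
        .options(**kafka_options)
        .load()
        .select(from_json(col("value").cast("string"), schema).alias("event"))
        .select("event.*")
    )

    def write_batch(batch_df, batch_id):
        # Assumes the MongoDB Spark Connector 10.x is installed on the cluster.
        (batch_df.write.format("mongodb")
            .mode("append")
            .option("connection.uri", mongo_uri)
            .option("database", database)
            .option("collection", collection)
            .save())

    return (
        events.writeStream
        .foreachBatch(write_batch)
        .option("checkpointLocation", checkpoint_path)
        .start()
    )
```

In a Databricks notebook, `spark` is already defined, so a call might look like `start_stream(spark, eventhub_kafka_options("my-namespace", "my-hub", conn_str), mongo_uri, "streaming", "events", "/tmp/checkpoints/events")`, with every argument replaced by your own values. The checkpoint location lets the query resume from its last processed offsets after a restart.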

This end-to-end pipeline demonstrated how flexible and robust Azure’s streaming architecture can be, especially when combined with Databricks and Spark.

📂 Full Project & Resources

Everything—from the setup steps to Spark code and architectural decisions—is documented in this GitHub repository:

📌 Why This Matters

Real-time streaming is transforming industries—from fraud detection to IoT, marketing analytics, and supply chain optimization. This project is a great starting point for teams looking to leverage Azure-native tools to build scalable pipelines.

🤝 Always open to collaborating or brainstorming ideas. Feel free to connect or drop a message!

#DataEngineering #Azure #Databricks #ApacheSpark #RealTimeStreaming #StreamingAnalytics #AzureEventHub #CosmosDB #MongoDB #CloudSolutions #BigData #GitHubProjects #LearningByDoing